Analyze the sharded dataset in memory#

import lamindb as ln

import lnschema_bionty as lb

import anndata as ad

💡 loaded instance: testuser1/test-scrna (lamindb 0.54.3)

ln.track()

💡 notebook imports: anndata==0.9.2 lamindb==0.54.3 lnschema_bionty==0.31.2 scanpy==1.9.5

💡 Transform(id='mfWKm8OtAzp8z8', name='Analyze the sharded dataset in memory', short_name='scrna3', version='0', type=notebook, updated_at=2023-09-29 14:46:41, created_by_id='DzTjkKse')

💡 Run(id='J8w0qH8ZGFn49EehwWHM', run_at=2023-09-29 14:46:41, transform_id='mfWKm8OtAzp8z8', created_by_id='DzTjkKse')

ln.Dataset.filter().df()

| name | description | version | hash | reference | reference_type | transform_id | run_id | file_id | initial_version_id | updated_at | created_by_id | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| id | ||||||||||||

| nV0w72HVEfJeK6lgb7BO | My versioned scRNA-seq dataset | None | 1 | WEFcMZxJNmMiUOFrcSTaig | None | None | Nv48yAceNSh8z8 | Pd5UweAXC3cCH1aOMdr1 | nV0w72HVEfJeK6lgb7BO | None | 2023-09-29 14:45:51 | DzTjkKse |

| nV0w72HVEfJeK6lgb70T | My versioned scRNA-seq dataset | None | 2 | 0Uq1qU7xX7R6pyWN3oOT | None | None | ManDYgmftZ8Cz8 | 0eCAXKvC5AGTwRu42M0X | None | nV0w72HVEfJeK6lgb7BO | 2023-09-29 14:46:30 | DzTjkKse |

dataset = ln.Dataset.filter(name="My versioned scRNA-seq dataset", version="2").one()

dataset.files.df()

| storage_id | key | suffix | accessor | description | version | size | hash | hash_type | transform_id | run_id | initial_version_id | updated_at | created_by_id | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| id | ||||||||||||||

| Vf6s6oe8cTQ8oqMCCjeT | 975nKuX0 | None | .h5ad | AnnData | 10x reference adata | None | 660792 | a2V0IgOjMRHsCeZH169UOQ | md5 | ManDYgmftZ8Cz8 | 0eCAXKvC5AGTwRu42M0X | None | 2023-09-29 14:46:24 | DzTjkKse |

| nV0w72HVEfJeK6lgb7BO | 975nKuX0 | None | .h5ad | AnnData | Conde22 | None | 28049505 | WEFcMZxJNmMiUOFrcSTaig | md5 | Nv48yAceNSh8z8 | Pd5UweAXC3cCH1aOMdr1 | None | 2023-09-29 14:45:51 | DzTjkKse |

If the dataset doesn’t consist of too many files, we can now load it into memory.

Under-the-hood, the AnnData objects are concatenated during loading.

The amount of time this takes depends on a variety of factors.

If it occurs often, one might consider storing a concatenated version of the dataset, rather than the individual pieces.

adata = dataset.load()

The default is an outer join during concatenation as in pandas:

adata

AnnData object with n_obs × n_vars = 1718 × 36508

obs: 'cell_type', 'n_genes', 'percent_mito', 'louvain', 'donor', 'tissue', 'assay', 'file_id'

obsm: 'X_pca', 'X_umap'

The AnnData has the reference to the individual files in the .obs annotations:

adata.obs.file_id.cat.categories

Index(['Vf6s6oe8cTQ8oqMCCjeT', 'nV0w72HVEfJeK6lgb7BO'], dtype='object')

We can easily obtain ensemble IDs for gene symbols using the look up object:

genes = lb.Gene.lookup(field="symbol")

genes.itm2a.ensembl_gene_id

'ENSG00000078596'

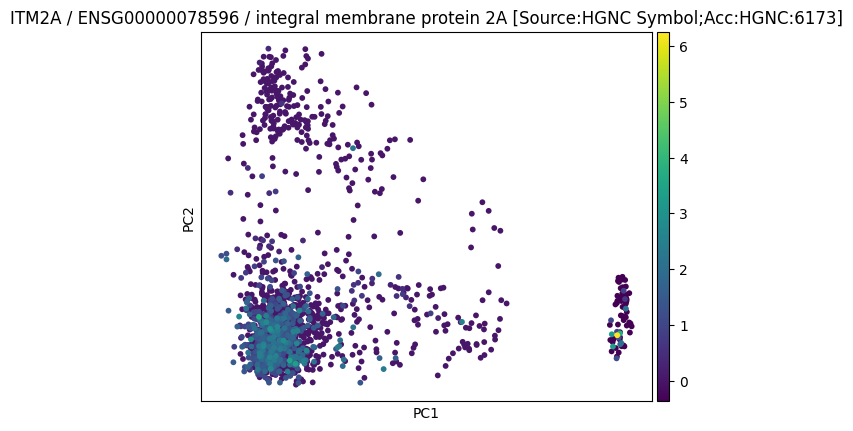

Let us create a plot:

import scanpy as sc

sc.pp.pca(adata, n_comps=2)

2023-09-29 14:46:45,341:INFO - Failed to extract font properties from /usr/share/fonts/truetype/noto/NotoColorEmoji.ttf: In FT2Font: Can not load face (unknown file format; error code 0x2)

2023-09-29 14:46:45,367:INFO - generated new fontManager

sc.pl.pca(

adata,

color=genes.itm2a.ensembl_gene_id,

title=(

f"{genes.itm2a.symbol} / {genes.itm2a.ensembl_gene_id} /"

f" {genes.itm2a.description}"

),

save="_itm2a",

)

WARNING: saving figure to file figures/pca_itm2a.pdf

file = ln.File("./figures/pca_itm2a.pdf", description="My result on ITM2A")

file.save()

file.view_flow()

# clean up test instance

!lamin delete --force test-scrna

!rm -r ./test-scrna

💡 deleting instance testuser1/test-scrna

✅ deleted instance settings file: /home/runner/.lamin/instance--testuser1--test-scrna.env

✅ instance cache deleted

✅ deleted '.lndb' sqlite file

❗ consider manually deleting your stored data: /home/runner/work/lamin-usecases/lamin-usecases/docs/test-scrna